You’re already selling ahead of your roadmap and your dev team is getting pretty big. In this BoS USA 2015 talk, Trish Khoo outlines two approaches to keeping pace and quality high without hiring an army, drawing on a decade of software testing at Campaign Monitor, Google and Microsoft.

Find Trish’s talk video, slides, transcript, and more from Trish below.

Video

Slides

Learn how great SaaS & software companies are run

We produce exceptional conferences & content that will help you build better products & companies.

Join our friendly list for event updates, ideas & inspiration.

Transcript

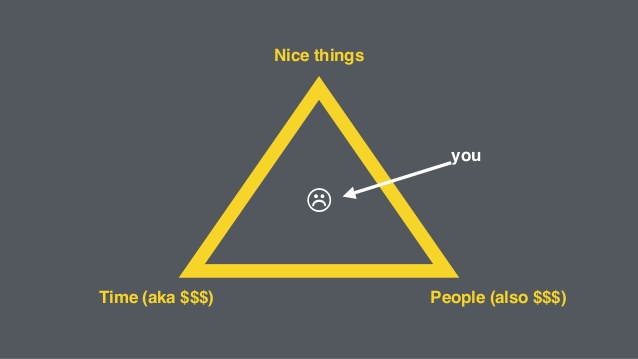

Trish Khoo, Google: Hey, everyone! I’m Trish, I work for Google and I’d like to talk to you about software testing. I’m going to talk to you about quality. First, though, I would like to talk to you about tradeoffs. Has any of you heard of the iron triangle of software development by any chance? Yeah? There’s a couple of people. Ok, for those that don’t know, this is basically a triangle that software people use to justify to ourselves why we can’t have nice things. It looks like this, yeah. So nice things being things like quality for instance in our product. And in order to have things like quality, we have to sacrifice things like time, also known as money, or we have to add more people, also known as money. And guess which of these things is usually the one that takes the hit. It’s the one that isn’t money. So what I do at Google is I help teams to enter a triangle free world. I want teams to be able to get all of these things, to have the quality of software that they want, as well as having that at speed as well as not having to hire a few people to do that, and it’s possible. I used to think it wasn’t, definitely is. And I even know that there are teams that don’t even have a QA anymore at Google, Microsoft, Pivotal labs and many companies around the world.

The dream is real, and additionally this is how it actually helps you scale. Because I’m at Google, and Google is all about scale. So this is the type of scale that we deal with at Google on a regular basis in the area that I work. I work in the area of Google that works with geographical data, massive amounts of it. And these are the types of numbers that we deal with on a regular basis. We’re talking big traffic, big data and big impact, and also big risk as well. Things like over a billion active monthly users of Google Maps services, over 2 million apps and services that are depending on the data that we’re curating, and we’re even getting tens of thousands of pieces of data from real-life users as well. Scary stuff! And to top it off, we’re also a little bit obsessed with quality, to the point where Google Maps is even accounting for shifts of the tectonic plates underneath the earth. We’ve got the world maps, 200 countries and territories, North Korea is even in there somehow. Don’t ask me how! Street View imagery is available across 66 countries, including parts of the Arctic and Antarctic, so from the comfort of your living room you can walk around with the penguins. And satellite imagery is covering a significant amount of the world’s surface, to quite a high level of resolution, so that you can see your house from here.

But how do we get to be able to do these amazing Google things and Google quality and scale?

What I’ve noticed, and what other people have also noticed, such as my friend Simon Monashy, who is the director of a venture capital firm in London and works with startups, is something he said that really resonated with me. What he finds when he’s doing his due diligence is that small companies often hit a point where the fast technical decisions they made along the way to get revenue very quickly hurt them once they reach a certain size, because those decisions have created scaling difficulties. And this is something that you saw Jeff talk about earlier, tall Jeff, when he was talking about technical debt – I know he had this graph where it seemed to go up and there’s this wiggly thing happening in the middle and I think it was the hot coals of software growth. So this is the same thing that I’ve noticed, and investors too. I notice it because it usually has an impact on my inbox: about this time, executives typically make decisions about quality and quality teams, and I will get something, usually in the form of a job ad in my inbox, because somebody has made a decision like this.

Quality problem, quality solution: hire a QA team

It makes sense, right? When I said QA team, I am talking about hiring a team of manual testers usually to come in and help assure that the product is the quality that you want. To test it out, and this is usually people using the system like you would imagine a real user would so they can give you feedback on the overall quality of your product so that you can fix it. Don’t do this! So you may have some people already and thinking of outsourcing this and hiring a brand new team to wage war against the bad quality of your software. The problem with this is that now you’ve got a team of people who is accountable for quality in your software and they have no power to affect the quality of it directly. People are reporting issues but they can’t fix them. There’s an old QA joke and you’re gonna find this hilarious – where it goes how many QA people does it take to fix a light bulb? And the answer is none because they will just tell you that the room is dark. I thought that it was funny. So this is the problem with them.

The main problem, and the reason it doesn’t scale, is that manual testing gets very slow, especially once you’ve gone from a simple product to a complex one. Manual testing is really fast in a simple product and it is the best way to be testing a simple product. But when you get to a complex product, manual testing becomes really, really slow because there are so many paths that you can now take through your system. Somebody manually going through all these paths takes a really, really long time, and people have to do this for your entire complex system every time you want to launch something new, in case somebody has broken something when they implemented your new, shiny feature. Because usually they have. So then the next thing turns up in my inbox, the next type of email I get, because another decision has been made: hiring an automation team to fix the testing problem. This is when we have a team of people who can write automated tests to replace the manual tests that were happening before. And the approach is usually along the lines of: I have a whole lot of manual tests, let’s make them faster by writing a program that will execute the tests so a human being doesn’t have to. In theory this works really well, because automated testing is faster than manual testing. The problem is that usually this is approached in a way that isn’t terribly scalable. So automated testing good, automation team bad.

This is typically the mail I get in my inbox. The reason why this makes me cringe is – the blimp I’m all in favour of, I love the blimp, but it’s the other things that I’m not a fan of. You can see that low coding skill is ok for the test automation engineer jobs. These are jobs which are seen as not so skilled in terms of software development, generally seen as scripting work. Even if you wanted to hire a really good software developer to do this, it’s very unlikely that they would take the job, because they would be making less money doing this, it’s a much less glamorous position and they’re going to work with a lot of people whose coding skills are not that great.

So the other thing I see people doing to address this coding problem, if they have a hard time finding good people to do this, is to say: let’s teach our manual testers how to code, then, because they’re doing the same job and apparently it’s not that hard. How hard can coding be? It’s only 10 lines or whatever. And then they’re writing these tests on behalf of the developers, so you end up with this big piece of software that’s testing your software, and it’s not only really difficult to maintain because the code’s a mess, it’s usually unreliable as well. And this is also because it’s a separate team taking a really full stack approach to test automation, which is unreliable: it’s going straight to the UI and trying to do things that users would do. But we don’t really have technology that can act like a human being yet. That kind of artificial intelligence is beyond us at this point. Hopefully we’ll get there one day, and I’m sure the first thing we’ll do is apply it to software testing.

So one of my friends recently described this as hiring a team of people to encourage bad practices within your development team. Because what you’re doing is getting a group of people to verify that what another person has made works correctly, which doesn’t really sound right if you think about it for a minute. We went through this ourselves; nobody is really exempt from this kind of change, I think. About 7 years ago, Google teams were actually doing a lot of testing for releases. It was a lot of manual testing and it took up the majority of our releases. We realised at that point that we wanted to get faster, and we needed to get faster in order to compete in our market. Once or twice a month releases were not gonna cut it anymore. So after some amount of time, a few years, we’ve gotten to the point today where teams are releasing even multiple times a day, and there was no decrease in quality as a result of this.

So what’s the secret? The whole team has to commit to quality.

We don’t have a separate group of people where quality is your job but you can’t do anything about it. We have everybody in the team committing to quality as being part of their jobs. This means developers, product managers and you, miss or mister CEO, it means everybody has to commit to quality as something that we care about as a team and we’re gonna do our best to actually make sure that it happens.

Now let’s talk a little bit about this testing phase, because I’m going to tell you why software launches can be really painful if testing is not done in a really great way. Has any of you heard of the testing phase as a leader? It’s usually around the time when you’re asking why isn’t my project done yet, and people will say it’s still in the testing phase, or something like we’re still fixing bugs. Another symptom of this is that when people then ask when it is going to be done, the answer is usually something along the lines of we have no idea, or something more like yes, definitely by Friday, but you know it really means we have no idea. So this is a symptom of having this find-and-fix kind of thing happening around this time. You can actually try to predict when something will launch if you have a testing phase; it just requires a lot of human hours to manage it, and you will end up hiring somebody almost full time to manage this process with graphs and fix rates and the number of bugs we have now. You will see the graph go down until you have 0 bugs, but you know tomorrow it will bounce back. That’s what we call zero bug bounce, and you’ll see it bounce. We’ve got 0 bugs! Then we can launch. And that’s not even the real number of bugs, because every day you’re doing a triage meeting, and the triage meeting is designed so that you can look at the bugs you have and negotiate with everybody to work out what is the least crappy product you’re willing to live with in production. We just need to get this thing out the door! As well as that, it is a huge waste of expensive engineering hours, and I will show you why.

Because this is what’s happening in the development teams. If we have a look at a typical development team, we’ve got something like a developer, tester and some kind of product owner, often a business analyst of some kind, project manager, whatever you want to call this person, somebody who cares about product requirements. And what will happen is you’ll have the developer – we’ll be giving a new feature to the tester, the tester will have to drop whatever the heck they were doing and then have a look at this feature and then they will find something wrong with it, inevitably they will give it back to the developer, the developer will say don’t bother me, I’m busy! And then when they eventually get around to looking at the bug, then they will have to figure out what it means, reproduce it, debug and then they have to give it back to the tester and say did I fix it or not? Because I don’t know. And then the tester will usually say something along the lines of yes or no and you introduced 2 more while you were at it. Anyway – we’ll just log them and move on. And then that goes back to the product owner and he will say either yes, that was exactly what I wanted – good feature, thank you everyone! Or no, actually now that I look at it, it’s not exactly what I wanted so can you just go back and make just a couple of little tweaks? We’ve still got time with the schedule, that’s fine. And it goes round and round and you know these loops and feedback loops that you see here? These can be hours, days, weeks. Weeks of launch time. This is weeks between your idea and you getting that idea into your production. And a lot of it is just waiting around, it’s waiting for people to know things and that something is broken and they need to fix it and that there is a problem and something needs to change and a decision needs to be made.

So I’m going to step back a little bit and ask what is testing actually anyway? Because when I usually talk about being a tester or being in a test related role, people usually assume that I’m in a role that involves something along the lines of manual testing, as in I’m doing what he’s doing and I find bugs and that’s kind of it. Professional, sophisticated software testing is an approach that lets people get the information that they need at the time they need it, in order to make timely decisions. And it can be a change to your process, the introduction of tools that will allow people to do this more easily, it can be a wide range of activities.

Now the easiest and the most well-known aspect of testing is called checking. And checking is exactly what it sounds like, it’s like having a to-do list. Can I log into the app? Can I buy a hamster on Amazon? Does my company logo still look like underpants? Things like that. Everybody can do checking, ok? It’s super easy and everybody should do it. This is what I was told in grade 3 of school. Check over your own work, make sure it’s fine before you hand it in, that kind of thing. What a change that makes! Now we don’t have a tester that’s making sure everything works along the way. We don’t have a separate person checking everything for everybody. The developer is doing their own testing and is writing automated tests at home. They can instantly know whether or not something is broken once they’ve implemented something, and they can immediately fix it. It’s preventing problems before they happen.
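Editor’s sketch: the “to-do list” idea of checking can be written down as a handful of automated checks that a developer runs right after making a change. The example below is a minimal illustration only, not code from the talk; the `USERS` store, `can_log_in` and `run_checklist` are hypothetical names invented for it.

```python
from typing import Dict, List

# Hypothetical in-memory user store, standing in for a real login backend.
USERS: Dict[str, str] = {"trish": "s3cret"}


def can_log_in(username: str, password: str) -> bool:
    """Return True only when the user exists and the password matches."""
    return username in USERS and USERS[username] == password


def run_checklist() -> List[str]:
    """Run every check and return the names of the checks that failed."""
    checks = {
        "valid credentials log in": can_log_in("trish", "s3cret"),
        "wrong password is rejected": not can_log_in("trish", "guess"),
        "unknown user is rejected": not can_log_in("nobody", "s3cret"),
    }
    return [name for name, passed in checks.items() if not passed]


if __name__ == "__main__":
    failed = run_checklist()
    print("all checks passed" if not failed else f"failed checks: {failed}")
```

Because the checklist runs in well under a second, the person who changed `can_log_in` finds out immediately if they broke it, instead of waiting for somebody else to report it.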

And then the product owner preferably is checking over their requirements. The developer is talking to the product owner, making sure that they understand completely what it is that they were supposed to be making and that’s gonna help them save a lot of process in the making sure that it’s all right. And then this saves all of this wasted launch time and it gets done quicker without having a very terrible bug finding testing phase at the end. You notice I took the tester out of there and it doesn’t look great for my own future career prospect, something with testing in the title.

I want to emphasise checking is really easy, testing is really hard. So the checking part everybody should do. Having a testing expert in your team is going to help you to make that process better overall. And this actually helps you get the most value out of your testing expert because they’re not wasting their time, doing these basic checking activities anymore. They are actually looking at your overall process, helping your team work more efficiently, more productively. And they can even make a development tool if you hire an engineer as a testing expert, because there are engineering tester experts back there. These people can help you make internal tools that are going to help your development team become more productive or if not make these tools, at least research the tools and help introduce them and configure them properly for the team to help the team become more productive.

The hard part – change

Ok, here is the hard part. This is the hardest part of my job because it requires a massive change in culture, through the development team and throughout the whole company. It is the reason why I wanted to come to this conference and not just go to a development type conference and tell them all to start testing, because this has to come from the top down if it’s ever going to change. So these are the types of excuses I typically hear whenever I say to a team hey, we’re going to do things differently now, we’re going to have developers testing, PMs checking their own work, and automated tests. I get a lot of excuses in a lot of forms, but if I sit down with them for half an hour and ask them what they really mean, then it really boils down to these 3 answers. And these 3 answers are generally solvable from a management level.

First of all is we don’t have time. That’s a big one. Because I don’t usually meet engineers who want to write bad code. But the problem is that when you give an engineer a deadline and say I just want this thing done, that definition of done is fairly flexible. And I’ve worked in companies where the developer who has produced the worst, buggiest code you’ve ever seen is the one getting rewarded for being the best developer on the team because they are the ones that are delivering fast, apparently. They are the ones that are getting it done quicker because they changed the definition of done to you’ve just done the minimum you need to do to get it to somebody so they can see what it is, right? So the thing is that if you’re explaining the concept of launch time to them and the time you’re saving in launches, doing that due diligence earlier may take more time to get to a new definition of done, but it’s gonna get everybody to a more predictable level of done faster overall. And that’s the thing that needs to be made transparent to the teams in order to buy into this concept of not having time and it needs to be more apparent to the management level or the leadership and the scheduling people, because they’re going to see initially it seems like we’re taking a longer time to get to done.

But what they’ll see, if everything goes correctly, is that even though it’s taking more time to get to that level of done, we’re reducing this big testing phase at the end, so in terms of launch time we’re getting everything done faster. The second one is we don’t know how. This is a big problem actually. In the software industry and in computer science degrees, we don’t often get taught how to do good testing. I remember from my computer science degree I got taught about unit tests and that’s it. Most people learn testing practices on the job, or if they take an interest in it, they might learn it themselves. It’s not something that sounds exciting enough that you go and learn it on your own time, so it just doesn’t generally happen that way. But sure! It is going to require a level of skilling up in a team of developers who aren’t used to working this way. But it’s a worthy investment, and it’s worth hiring new developers who actually know how to work in this way to train the other developers, because it’s difficult to just tell someone to learn this skill when they don’t actually know how.

And of course the last one is not the way we work at this company. Of course, if people don’t feel like they’re getting rewarded for working in this way, then there’s no incentive for them to do it, because it feels at first that it’s going slower and previously it could be that they won’t get rewarded for getting things done right, but getting them done fast. So it needs to become the new norm that people are getting rewarded for doing things the right way and getting quality things out – this is the new standard of expectations across the company.

Hiring

So I touched on hiring a little bit, obviously if you want developers to start testing, we need to start hiring developers who can test. It’s pretty simple to add to the interviews in terms of coding. We’re already asking them to code something and test that code. That’s a pretty simple way to at least get started. There are ways as well as looking for people who know TDD, things like that. But what I really want to talk about here is talking about the type of testing experts you can hire, because at Google, we hired two types of testing experts for our teams. So we don’t hire full time manual testers, we hire engineers.

You will notice that the first role there doesn’t have the word test or quality in the job title at all, and there’s a good reason for that. It’s because, like I said before, it’s very hard to hire very good engineers who are willing to have the word test or quality in their job title, because across the industry there’s a little stigma around that. So what we have is Software Engineer, Tools and Infrastructure, which is a good description of what they do anyway. We hire these engineers at the same standard as regular Google software engineers, which, if anybody knows Google’s hiring process, is a pretty damn high bar! And the job of these people is either to be creating new tools and infrastructure for teams to use, or to be sitting with development teams as a peer, saying hey, have you tried writing a test for that? Look how easy that was! And now we can tell if this thing is broken before it gets pushed into some test environment and breaks everybody’s day. So this is a critical part of our engineering productivity mission.

Test engineer is a role that is higher in testing experience. They look at processes and at the entire way that the team is functioning, and look for ways to make everybody more efficient. They’re also engineers and have the ability to understand complex system architecture and make recommendations of new tools and approaches that we can use to make things better. Test engineers are usually not hired to as high a bar as the software engineers in tools and infrastructure in terms of technical ability, but that’s counterbalanced by a high expectation of testing ability. The reason for this is that it’s very hard to find somebody who has this level of testing experience and this level of software development experience, because people only have 8 hours of productivity in them a day. So test engineers do tend to be, I would say – somebody once said to me that they would be a lead developer in just about any other company if it wasn’t Google, but again, this is Google’s hiring standards, so the emphasis is on the testing experience.

It’s important, if you find somebody who can do this job, that they feel they are first class within the team. I say this because these people are very hard to find. I know, because I try to hire them all the time, and if you find one, hold onto them for dear life, because otherwise somebody like me will steal them away from you. As well as that, this person’s job is usually to convince people in the team to do what they don’t want to do, and in order for them to be listened to they have to be first class citizens within the team. Like I said before, people with test or quality in their title are often seen at the same level as manual testers in some regard. It’s often not seen as a skilled role, so in order to make them feel like a first class citizen within the team, it has to be recognised in terms of salary and job title, to make sure they do feel like they have the authority to change things within the team. They need management to back them up, otherwise anything they say will have no effect and it will all be futile.

So one important thing that we also learned when we changed Google was that learning was good.

We don’t want to blame people, we want to be able to empower people to learn from mistakes and let people feel like it’s ok to make mistakes as long as we’re learning from them. And just getting feedback in general was a very good lesson that we learned here.

How we do this at Google is we have a postmortem culture. A postmortem is a document written by the person who has the most experience of a failure incident. It is a factual timeline of events, and it may name names but in a factual way, not offering any kind of opinions or blame or anything like that. After that it will have suggestions for things that we can do to never let this happen again, right?

And then we’ll have these practical items coming at the end of that, so that people will actually take some action as a result of this. So we’re doing something after we learn; we’re not just musing on the incident and then not doing anything about it. And the great thing about this is that people learn that it is ok to make mistakes and take risks. The other thing is that if people feel like they’re going to get punished or blamed whenever they make a mistake, they’re not going to tell you about mistakes that they or other people have made, because they like their co-workers and they’re not going to rat them out. So if you have a culture of people who feel like they can actually tell you about mistakes, then you’re going to learn about potential problems earlier rather than later.

Launch and iterate. This is what this entire thing allows you to do. Now we’re getting to the bit where you’ve gone through all this work and you’re getting the payoff from it. Faster releases mean faster feedback. If we can release once every day, it means that we can get feedback from our customers every single day on what we’ve released to production. It means we can make changes every day and we can pivot on those changes, and we know things sooner rather than later so we don’t waste as much time. Releases are cheap and we can make changes as fast as we can think of them. That’s probably a dangerous thing, but it’s also a very powerful thing.

One thing that we like to do at Google is experiment. It’s a way for us to make changes in production without necessarily having the huge risk associated with that. If we want to try out something crazy, we can try it out on a small percentage of our users; we don’t have to try it on everybody at once. Let’s say we want to figure out if the red button works better than the green button. Do people even notice a red button on a red background? Who knows? Maybe it’s a cool, new idea! Let’s try that out, but we’ll only do it for 10% of the users, and the other 90% can have their normal experience, just in case it’s a silly idea that the designer had in his sleep.
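Editor’s sketch: one common way to show a change to only a fixed percentage of users is to hash each user ID into a stable bucket from 0 to 99 and enable the experiment for the lowest buckets. The code below illustrates that general technique under stated assumptions; it is not a description of Google’s experiment system, and `in_experiment`, `button_colour` and the `"red-button"` experiment name are hypothetical.

```python
import hashlib


def in_experiment(user_id: str, experiment: str, percent: int) -> bool:
    """Deterministically place roughly `percent`% of users in an experiment.

    Hashing the experiment name together with the user ID gives every user
    a stable bucket, so the same user always sees the same variant.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100  # 0..99
    return bucket < percent


def button_colour(user_id: str) -> str:
    """Show the red button to about 10% of users; everyone else keeps green."""
    return "red" if in_experiment(user_id, "red-button", 10) else "green"


if __name__ == "__main__":
    sample = [f"user-{i}" for i in range(10_000)]
    red = sum(button_colour(user) == "red" for user in sample)
    print(f"{red} of {len(sample)} sampled users see the red button")
```

Setting `percent` to 0 switches the experiment off for everybody without a new release, which is part of what keeps the risk small.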

So we can do that, we also have things like dog food. Dog Food I think it’s a brilliant way of trying out new ideas within your company. Dog food comes from the phrase eating your own dog food. It’s something that sounds unpleasant but it’s really about trying out your company’s products internally before you release them to the world. This is a great way for your company to find edge cases and usability issues, not functional issues which should be found before. But it’s also a way for your company’s employees to get a little bit closer to the product you’re making. Obviously it doesn’t work for all products but if you have a chance for your employees to actually be users of your own product, it gives them a sense of owning that quality as well. They have to use it so they’re very much invested in making it a good product.

So let’s recap here: what are you getting out of this? Of course, you’re getting better quality software. You can change product direction quickly, but ultimately what this is giving you is the freedom to innovate, and innovating is the fun part of this. So that is the best and most compelling reason I could think of as to why you would want to do this. I intentionally left a bit of time for questions, so please, ask me some questions!

Q&A

Audience Question: I win! Hi! Thank you! First of all, Mark, will you please make this video the first one that you post? I have a dev team that needs to see this and a QA team that doesn’t want to see this. My question is on regression testing. Now I can understand how you developers want to actually test what they’ve been working on. Regression testing is somewhat important. Are you suggesting that they do that as well so they kick off their test to make sure what they did and they kick off automated tests or whatever they do? You do think that’s the best way?

Trish Khoo, Google: Yeah, absolutely! In fact, regression testing is the main thing that I want them to be doing. It very much feeds into the checking activity that I described; it’s the poster child of checking, basically. It is just a checklist of what still works. A developer once said to me that he sees automated regression tests as protection for his code against other developers. I know he was kind of joking there, but it’s kind of true, because sometimes it’s not just about knowing if you’ve broken the things that you’re expecting with the tests that you’re running; you’re also running other developers’ tests. So that’s actually protecting you against things that you didn’t know about. That’s where a lot of regressions come in, because often developers are working with code that they haven’t worked with before, and they don’t know everything about that code and how it’s supposed to work. Maybe the whole team has changed and nobody knows how that code works anymore. Your automated regression testing becomes a way for you to automatically check everybody’s years’ worth of knowledge across that code, and it acts as documentation as well, to tell you what that code was ever supposed to do in the first place.

Audience Question: So, I’m a bit of a big fan of the Joel test, and tall Jeff reminded me of it. We make a SaaS-based application, cloud based, and we deploy it to production 20 times a week. Our developers are doing their own testing and we think it’s an important part of closing the feedback loop, but there were three things when he reminded me of the Joel test: specs, testers and a schedule. These are three things that we don’t have and I felt bad about it. I was talking to Eli here about it; maybe we didn’t do it because of the feedback loop and having a separate group and that sort of thing. And those things seem to be 3 heads of the same beast, specs, testers and schedules, because if you have a spec you have to have a schedule, if you have a schedule you have to have a spec, if you have a spec you have to have testers, and if you have testers you have to have a spec. They all seem to be related. When I saw your stuff, I actually went back to where we were, that the faster cycle time and being able to release faster and having the developers test software is a better thing. So my question to you, with tall Jeff, if you want to duke it out with him on this, is: is there a common ground here or is it an either-or?

Trish Khoo, Google: When you say common ground you mean between having the spec and testers on team?

Audience Question: Well you don’t have a specific – you took the testers out of your diagram and have them as part of the developers doing it, part of the Joel test is you have separate testers. So is there – we’ve kind of chosen to go your route, but I was questioning that at lunch, I was –

Trish Khoo, Google: I think it’s interesting that that’s on the Joel Test, because I agree with most of what is on there. I think that I would reframe that question of “do you have testers” in terms of “how do you do testing”. And I would be looking at that response from the team, whether all of the testing is done by the developers and, if it’s done by them, how they are doing the testing. Often, I’ll get the response back, “oh, we do unit testing”, but that’s not really enough. You need to look at how they’re doing their integration testing and regression testing. Just really looking at their testing practices in general: whether they are using an outsourced QA team, what kind of testing problems do they have? What kind of bugs do they have? How are they fixing bugs? Are they fixing them as they go, or are they having to allocate a huge amount of sprint time just for fixing bugs? Just figuring out how this team works in terms of testing activities and bug resolution.

Audience Question: Ok, I see a couple of problems with developers being testers. So one is that they might know their own scope, but if you look at the bigger picture, you might have 50 developers and the software needs to work as a single piece or unit of work. So how do you address that problem of testing the bigger picture, like the integration testing? I guess you’d need a QA team to take care of that. So that’s one problem, and the second problem is if a developer understands the functional requirements the wrong way, they’re also going to test it in the wrong way, right? So how are you going to address this?

Trish Khoo, Google: Yeah, so there’s a couple of points that I want to make here. What you mentioned about developers misunderstanding their requirements, that is a big problem on a lot of teams. Finding these requirement gaps is a problem I see on many development teams, and this is why there needs to be a lot of good planning for any complex project. That means analysing what the requirements need to be, even if it’s just a small set of requirements because you’re doing Agile in a per-feature way. It really needs to be thought out in terms of user scenarios; even just writing out user scenarios is a good way for product owners to verify that the way they’ve described the behaviour is correct. Bringing in somebody who knows the piece helps (well, product owners should be across this), and a testing expert, somebody like a test engineer, can also be an advisor on this, but really it’s something that developers and PMs need to recognise as a critical part of the software process, just figuring out that stuff at the start. You mentioned integration testing. One thing that I notice a lot of teams try to do is they have unit testing and they have end-to-end testing and they don’t have anything in between. And the issue with this is that, I love unit testing, it’s great! I have a lot of unit tests, it’s fantastic! End-to-end testing is a massive pain, and it helps to have these smaller tests in between that are verifying how this system works with that system. Is this API working as expected? Let’s take away the UI and see how the system works underneath that for a second. These sorts of in-between-level integration tests are really crucial because debugging is awful when it’s end to end. You can find something that’s happening intermittently and you have no idea where it’s happening because you’re testing everything and there are environmental issues. Just save yourselves the headache and break it down into smaller concerns, is my recommendation.
And when it comes to the end-to-end test, this is a good test, but if everything has been well tested in small segments up until that point, end-to-end testing should be as simple as the team getting together, trying it out end to end and making sure that it’s working well, and that should work all right.
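
The in-between level of testing described above, where the UI is taken away and two parts of the system are exercised together, can be sketched roughly as follows. This is a minimal illustration in Python, not anything shown in the talk: `InMemoryInventory`, `OrderService` and their methods are hypothetical names invented for the example.

```python
class InMemoryInventory:
    """Hypothetical storage component (stands in for a real stock system)."""

    def __init__(self):
        self._stock = {}

    def add(self, sku, quantity):
        self._stock[sku] = self._stock.get(sku, 0) + quantity

    def remove(self, sku, quantity):
        available = self._stock.get(sku, 0)
        if quantity > available:
            raise ValueError("not enough stock")
        self._stock[sku] = available - quantity

    def count(self, sku):
        return self._stock.get(sku, 0)


class OrderService:
    """Hypothetical API layer that a UI would normally sit on top of."""

    def __init__(self, inventory):
        self._inventory = inventory

    def place_order(self, sku, quantity):
        if quantity <= 0:
            return {"status": "rejected", "reason": "invalid quantity"}
        try:
            self._inventory.remove(sku, quantity)
        except ValueError:
            return {"status": "rejected", "reason": "out of stock"}
        return {"status": "accepted", "sku": sku, "quantity": quantity}


def test_order_reduces_stock():
    # Integration check: the service and the inventory working together, no UI.
    inventory = InMemoryInventory()
    inventory.add("widget", 5)
    service = OrderService(inventory)

    response = service.place_order("widget", 2)

    assert response["status"] == "accepted"
    assert inventory.count("widget") == 3


def test_order_rejected_when_out_of_stock():
    # A failure here points at these two components, not the whole stack.
    inventory = InMemoryInventory()
    inventory.add("widget", 1)
    service = OrderService(inventory)

    response = service.place_order("widget", 2)

    assert response == {"status": "rejected", "reason": "out of stock"}
    assert inventory.count("widget") == 1


if __name__ == "__main__":
    test_order_reduces_stock()
    test_order_rejected_when_out_of_stock()
```

Because each check touches only two components, a failure narrows the search far more than a failing end-to-end run would.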

Audience Question: What percent code coverage do you strive for in your team for your automated tests?

Trish Khoo, Google: That’s a contentious one. I think it very much depends on the type of application you’re building and the way it lends itself to unit testing, because usually it’s something that’s measured in terms of unit testing. With integration testing, code coverage becomes a bit of a hairy thing because usually you’re trying to get more coverage at a behavioural level, and code-wide coverage becomes more of a hazy signal for you. I would say in general, code coverage, I think – I don’t even want to put a number on it, because I’ll be in trouble. But one thing I would say about code coverage is that for a team that’s not doing a lot of unit testing, at the very least giving some incremental code coverage goal is good. If you can measure the lines of new code that have been added and how much of that is covered by tests, that is a nice way to start getting interim achievements for a team that’s new to unit testing, as they strive to hit an absolute goal, whatever that may be. But it very strongly depends.
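
The incremental coverage goal described above comes down to simple arithmetic: of the lines newly added in a change, what fraction is exercised by tests? A minimal sketch in Python follows. The function name and the representation of lines as sets of line numbers are assumptions made for this illustration; real coverage tools report this data in their own formats.

```python
def incremental_coverage(new_lines, covered_lines):
    """Return the fraction of newly added lines that tests execute.

    new_lines: line numbers added in the change (hypothetical input).
    covered_lines: line numbers executed by the test run (hypothetical input).
    """
    new = set(new_lines)
    if not new:
        # Assumption for this sketch: a change that adds no lines
        # trivially meets the goal.
        return 1.0
    return len(new & set(covered_lines)) / len(new)


if __name__ == "__main__":
    # Four lines were added; tests executed three of them.
    ratio = incremental_coverage({10, 11, 12, 13}, {1, 2, 10, 11, 12})
    print(f"incremental coverage: {ratio:.0%}")
```

A team could compare this ratio against whatever interim target it has agreed on for new code, independently of the coverage of the legacy code base.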

Audience Question: So the question that I have for you is, I’m wondering what the differences are in your testing process between, say, releasing to the cloud, where you can release multiple times per day, versus the process where you release apps and you have deliberately spaced-out releases.

Trish Khoo, Google: Yeah, that’s really interesting and something that I found particularly on our web app teams versus our mobile teams. A lot of mobile products have a limitation on the number of times you can release, based on the amount of time that it takes the Apple App Store or the Play Store to process your submission, so you do have a hard line there. The important thing is that even if you’re not releasing to production as frequently, it’s important to have things that are releasable on a frequent basis. So at least your team is checking them out, you’ve got automated tests running on them, and it’s important to keep that quality bar even if you don’t have the threat hanging over your head of “oh, it’s going to production today”.

Audience Question: Hey, Trish! So I’m working on moving to a combined engineering approach at the moment and we currently have software engineers and testers embedded in the same team, mostly manual testing around product entry teams. And one of the worries is that if we don’t take the testers out, then there will be a kind of recidivist approach. If we leave them in there, nothing will change. And coupled with that, we are wondering what to do about things like test infrastructure and best practices and specialist testing like performance or security testing. And so we’re thinking about pulling the best out and having a separate QA team to make that change. Would you not recommend that, and is that not the approach that’s worked?

Trish Khoo, Google: So just to clarify, you’re thinking of having a separate QA team to start looking at performance testing –

Audience Question: To cover the stuff we just can’t do in team. So the danger is that if we leave the testers in the team, then nothing will change, and we also have the second thing: we need to cover stuff like who is gonna manage the test infrastructure, who’s gonna bring in best practice, who’s gonna do stuff like performance, which is not needed by all teams, just a few, and it needs to be done by specialists.

Trish Khoo, Google: Right. So if you have – so this is where I think our testing experts such as the software engineers play a big part in the infrastructure side. It’s important to get people that know about that in particular. We can’t, of course, make the assumption that everybody who is in testing knows absolutely everything about testing, or infrastructure for that matter. So if somebody has the skills for that, then yeah. Definitely! It’s a good idea to use that person to be creating infrastructure for you.

Audience Question: Particularly where they sit. You recommend strongly against pulling all those people into a QA team, because you’re obviously keeping them embedded with the teams. We don’t really have that option, and the danger is that it won’t change if we keep them in the teams.

Trish Khoo, Google: It sounds like you’re changing the definition of the work that they’re doing. Because it sounds more like what you want to do is not have these people doing manual testing on behalf of the developers anymore, but to change the nature of their work to be focused more on providing tools and infrastructure. Which I think is a great goal, and I think the situation you’ve got is a tricky one, because first of all it’s changing the expectation of one group of people and saying your job is now this, not the one you’ve been doing every day, but something different. But also, the developers are still going to be treating them like “you’re testing all my work now”. Yeah, that’s tricky, and I think you can’t get away with it without making a massive deal about it and saying everything is changing now, this is going to be different. They’re not manually testing your work anymore. But I think from experience, the worst thing to do is say “you’re not doing this and they’re not doing this, so you have to do this”. It really has to come from an approach of: this is going to help, this person is working on this bigger, better infrastructure project, this is going to make the team more effective, and because they’re doing this they don’t have time to do this manual testing anymore. So this is something that the development team is going to take on, and somebody is going to provide help for that development team to learn how to do this better, so it’s not just a matter of dropping people into this world all of a sudden.

Audience Question: Hello! Thank you! We totally drank the “devs test all the things” Kool-Aid, and we found that there are some things that are difficult to test automatically that we do by hand, so on IE6, does the CSS class get applied correctly? Not IE, for anybody listening! And right now we’re doing that, we’re very small and trying to figure out how we scale that. Do we go offshore, do we find somebody who – right now we’ve got iPads and iPhones and Androids spread across everybody’s desk and that doesn’t seem to scale. And so I’m curious, how has Google solved that problem?

Trish Khoo, Google: It sounds like you’re talking about visual testing. Yeah, that is a tricky one.

Audience Question: Something you can’t automate?

Trish Khoo, Google: Actually you can. There are some very interesting tools out there and a lot of them are publicly available, actually. Look into things like Appium, for instance; Sauce Labs provides a lot of things in the web app space, and Appium is also for mobile. There are some really great tools in terms of screen diffing, so comparing one golden screen against another screen. Even if this causes problems in terms of slight differences, sometimes you can tweak these tools so they are matching within a certain percentage, so 97% correct. There are a lot of really great tools coming out, and I know mobile is still one of the most difficult things to test across platforms, but the technology is slowly keeping up.
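
The tolerance idea mentioned above, accepting a screen when, say, 97% of it matches the golden image, can be sketched in a few lines. This is a simplified illustration, not how any particular tool works: screens are modelled as plain grids of pixel values, and `match_ratio` and `screens_match` are hypothetical names.

```python
def match_ratio(golden, candidate):
    """Fraction of pixels that are identical in two equally sized screens.

    Each screen is a list of rows, each row a list of pixel values
    (a stand-in for real image data in this sketch).
    """
    if len(golden) != len(candidate) or any(
        len(g_row) != len(c_row) for g_row, c_row in zip(golden, candidate)
    ):
        raise ValueError("screens must have the same dimensions")
    total = sum(len(row) for row in golden)
    if total == 0:
        raise ValueError("screens must contain at least one pixel")
    same = sum(
        1
        for g_row, c_row in zip(golden, candidate)
        for g, c in zip(g_row, c_row)
        if g == c
    )
    return same / total


def screens_match(golden, candidate, threshold=0.97):
    """Pass when at least `threshold` of the pixels agree."""
    return match_ratio(golden, candidate) >= threshold


if __name__ == "__main__":
    white = (255, 255, 255)
    black = (0, 0, 0)
    golden = [[white] * 10 for _ in range(10)]

    # Copy the golden screen and change two of its 100 pixels.
    candidate = [row[:] for row in golden]
    candidate[0][0] = black
    candidate[5][5] = black

    print(match_ratio(golden, candidate), screens_match(golden, candidate))
```

Real screen-diffing tools typically add more than a raw pixel count, such as ignoring known dynamic regions, so the threshold here is only the simplest form of the idea.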

Audience Question: If you have developers who aren’t used to testing, do you have any resources that you could point them to, to learn how to test better?

Trish Khoo, Google: Oh, it actually entirely depends on the platform and the language and tools that they’re using, because every single one is going to take a different approach. For general kinds of testing needs, yeah, I think you really just have to find the one that’s appropriate to that. If you do any kind of search for TDD, that’s not a bad start, but –

Audience Question: I meant less tools and more methodology, because I find that everyone can learn how to do a unit test, but I’ve worked with a lot of developers who wrote really bad unit tests because they’re not thinking about the edge cases, so I’ve started giving them a checklist. You always have to check 1 and 0. And there are just different mentalities that a tester will have that developers don’t for testing those edge cases to make sure it’s well tested, and I was just wondering if there are resources out there to say this is what you need to learn from a mentality perspective. Not a tool perspective.

Trish Khoo, Google: I actually have the perfect book for you. It’s called Explore It! by Elisabeth Hendrickson, who is QA director at Pivotal Labs, and she has written a book that is specifically designed to teach developers exploratory testing practices. It teaches developers how to think the way that expert testers do, to be able to find these edge cases and to be able to mentally model the system in their heads to be able to find the complexity of the parts.
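
The “always check 1 and 0” checklist raised in the question can be turned into a small table of boundary cases. The sketch below is an illustration only: `page_count` is a hypothetical function invented here, and the list of boundaries is one plausible reading of such a checklist, not something taken from the talk or the book.

```python
def page_count(total_items, page_size):
    """Number of pages needed to show total_items (hypothetical example)."""
    if total_items < 0:
        raise ValueError("total_items must not be negative")
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    # Ceiling division using integers only.
    return (total_items + page_size - 1) // page_size


# Boundary checklist: zero, one, exactly full, and just over full.
BOUNDARY_CASES = [
    (0, 10, 0),   # nothing to show
    (1, 10, 1),   # a single item
    (10, 10, 1),  # exactly one full page
    (11, 10, 2),  # one item spills onto a second page
    (1, 1, 1),    # smallest valid page size
]


def test_boundaries():
    for total_items, page_size, expected in BOUNDARY_CASES:
        actual = page_count(total_items, page_size)
        assert actual == expected, (total_items, page_size, actual)


def test_invalid_inputs_are_rejected():
    for total_items, page_size in [(-1, 10), (5, 0), (5, -3)]:
        try:
            page_count(total_items, page_size)
        except ValueError:
            continue
        raise AssertionError(f"expected ValueError for {(total_items, page_size)}")


if __name__ == "__main__":
    test_boundaries()
    test_invalid_inputs_are_rejected()
```

Keeping the cases in a table makes the checklist visible in the test itself, so a reviewer can see at a glance which boundaries were considered.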

Audience Question: Thank you for this talk, by the way. It’s excellent! So one thing coming into testing in TDD and test driven development, coming into it, having not worked on that discipline, one thing that I never realised outside of the CYA mentality, making sure that everything works and is fine, nothing is broken. One thing I’ve learned is that there are additional benefits to it and things like software engineering and thinking through your problem and figuring out when issues are difficult, if something is really hard to test, that means that it’s – you’re probably doing it the wrong way. It could be done easier. Are there other things and benefits that you’ve seen that you can talk about with regards to testing and TDD that are outside of just hey, our code works?

Trish Khoo, Google: Yeah, absolutely! You’ve hit the nail on the head there! Usually what I hear most from TDD practitioners is that it’s more about design than it is about testing. Writing their tests makes them think about what it is they’re building, what the expectations are and how they’re going to build it upfront. It forces developers to build their product in a testable way, so this makes it easier to write in the future and avoids a lot of refactoring headaches later on. In addition, it also serves as documentation. So if anybody is wondering what this code was ever meant to do further down the line when you get new developers, then this is more of a plain-text kind of way to describe that this was meant to do this, this behaviour was working this way because of user expectations, and this kind of thing. It sets the expectations for the code and the tester at the same time. It’s very efficient that way!

Mark Littlewood: And I think that means we’re out of time. Let’s say thank you very much, Trish! Well done!


Trish Khoo

Trish Khoo manages a testing and infrastructure engineering team at Google. Her team provides support for the Google Maps engineering organization responsible for data curation.

Trish’s curiosity and passion for software development and testing have led her career to big corporations, small businesses, product development, consulting, public sector and government organizations. She has led test engineering teams for companies like Campaign Monitor, Salmat and Microsoft in Australia, the UK and the USA. Trish speaks often at tech conferences about test automation and healthy test practices. Most recently, she was the keynote speaker for CAST 2014.
